A quick ask

Last Friday, I learned that my job had been eliminated.

I’m looking for a new Product Role.

Here’s what I’m looking for:

- mid-stage venture-backed companies wanting to accelerate their process
- public companies that want to speed up innovation
- companies focused on automation and integration that need a Senior PM
- or folks who need a PM generalist as a fractional resource

If you want to work with me, schedule a discovery call, and let’s talk!

A few (well, maybe more than a few) years ago, when you were working on a technical problem, you’d start by considering the problem, breaking it into tasks, and then researching the individual pieces on sites like Stack Overflow.

What’s a typical technical problem? Perhaps you are using a system that has a documented API, and you’d like to pull some data into another document to analyze what’s going on. I was looking at Mockaroo, a tool that lets you design the schema for a set of data (let’s say for Accounts in a fictional customer relationship management system) and potentially link it to a related set of data (perhaps Contacts in a CRM system, or activity points generated by those same contacts in another system).

The goal? Generate realistic-looking information that looks like the kind of data people would generate when using an application, and then load that data into Google Sheets for later integration.

Go ahead and try to search for a solution to this exact problem on Stack Overflow. I’ll wait. I found this answer, which is generally the kind of solution you might want. (I searched “stack overflow load data into google sheets from mockaroo”, if you’re wondering.)

To make the answer work, you’d need to know:

- About Google Sheets and how you extend it using Google Apps Script (a JavaScript-based scripting platform, quirky in Googly ways)
- How to adapt the script provided on Stack Overflow to the use that you wanted
- How to debug any issues that showed up in the middle of your process

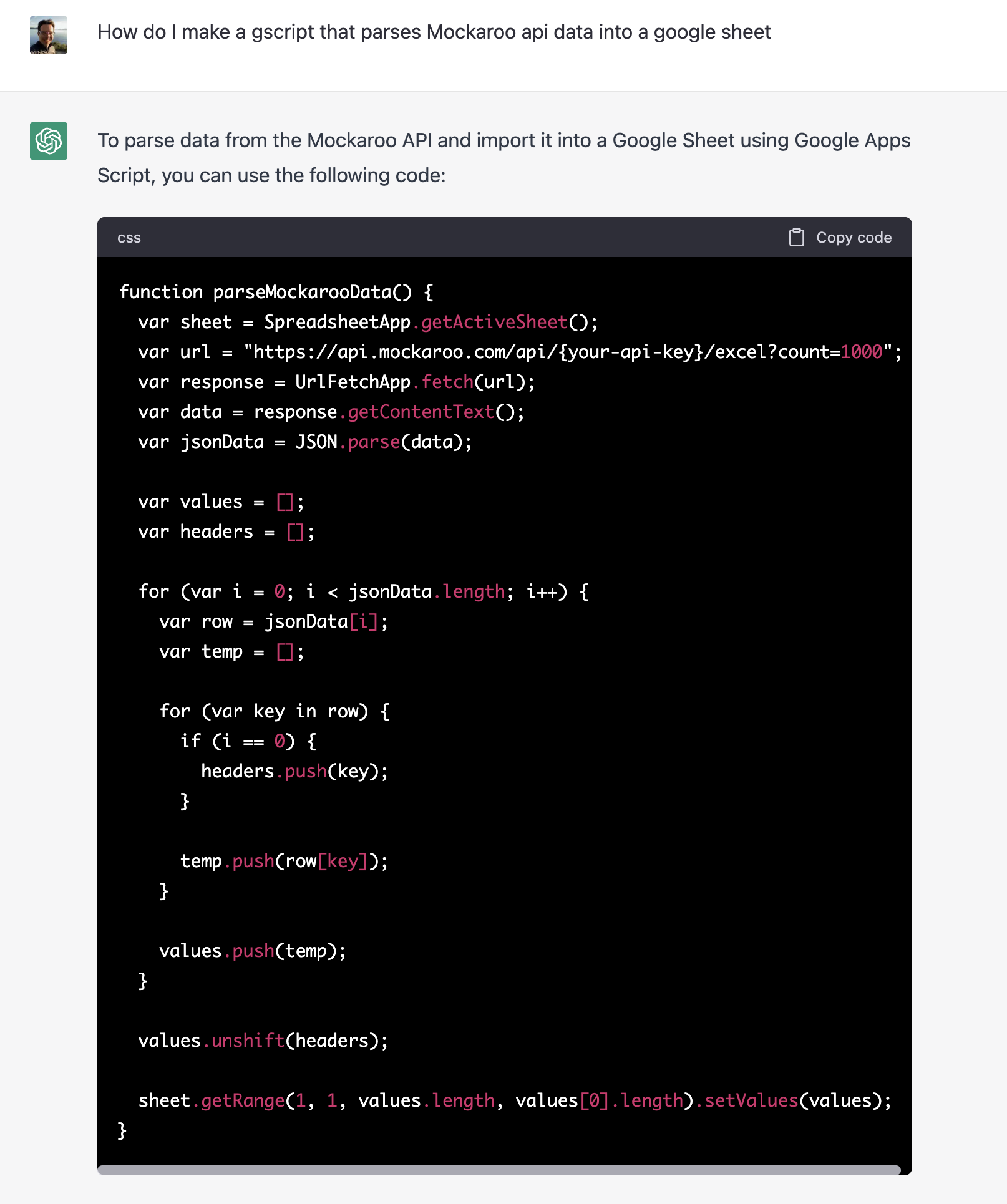

Or, you could ask ChatGPT. Here’s the equivalent in ChatGPT, asking it “how do I make a gscript that parses Mockaroo API data into a Google Sheet?”:

This example works in the following sequence:

- Open a new Google Sheet
- Go to Extensions > Apps Script
- Save your project with a new name, and grant it access to your Google Sheet
- Replace the URL in the parseMockarooData() function with a working Mockaroo API URL and key
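For readers who want something concrete to paste into the Apps Script editor, here is a minimal sketch of what a script along these lines might look like. It is not the exact code ChatGPT returned. The URL shape, the `YOUR_API_KEY` and `YOUR_SCHEMA_NAME` placeholders, and the helper name `recordsToRows` are assumptions for illustration, so check the Mockaroo documentation for your own endpoint. The sketch also assumes the API returns a JSON array of flat records.

```javascript
// Pure helper (hypothetical name): turn an array of flat JSON records
// into a header row followed by one row per record.
function recordsToRows(records) {
  if (records.length === 0) {
    return [];
  }
  const headers = Object.keys(records[0]);
  const dataRows = records.map((record) =>
    headers.map((header) => {
      const value = record[header];
      // Sheets cells cannot hold null/undefined, so use an empty string.
      return value === undefined || value === null ? "" : value;
    })
  );
  return [headers, ...dataRows];
}

// Entry point to run from the Apps Script editor.
// UrlFetchApp and SpreadsheetApp only exist inside Google Apps Script.
function parseMockarooData() {
  // Assumed URL shape; replace with your own working Mockaroo URL and key.
  const url =
    "https://api.mockaroo.com/api/generate.json?key=YOUR_API_KEY&schema=YOUR_SCHEMA_NAME";
  const response = UrlFetchApp.fetch(url);
  const records = JSON.parse(response.getContentText());
  const rows = recordsToRows(records);
  if (rows.length === 0) {
    return; // nothing to write
  }
  const sheet = SpreadsheetApp.getActiveSpreadsheet().getActiveSheet();
  sheet.clearContents(); // first draft: overwrite whatever is there
  sheet.getRange(1, 1, rows.length, rows[0].length).setValues(rows);
}
```

Keeping the reshaping logic in its own function means you can check it without touching a real sheet, and you can ask the bot to explain just that piece.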

It works, and it required minimal knowledge to get the job done. If I had questions, I could ask the bot what the code means and how it is solving the problem. In short, I now have access to a teaching tool that can help me learn many common tasks. This is much better than trying to learn it myself without any help, which was the case when I tried to do this with Stack Overflow.

But most people don’t use this sort of code in their regular job functions. They use simpler interfaces that employ point-and-click methods.

Generative learning models are going to change all of that.

How does no-code figure into this discussion?

The first versions of large language models worked only with text. They could not take a picture of a notebook and turn it into specific actions. The new versions are aware of images.

This means many things for those of us who “don’t code”, including that image sequences and button clicks (screen recorders, anyone?) can now be turned into repeatable processes … and maybe even code or working code overlays.

Telling computers what to do and how to do it just became the most important skill anyone can learn.

The future will be won by people who are able to take the problems they are trying to solve, break them down into a series of questions and follow-up questions, and use their language skills to describe a problem for a computer to solve.

This is prompt engineering.

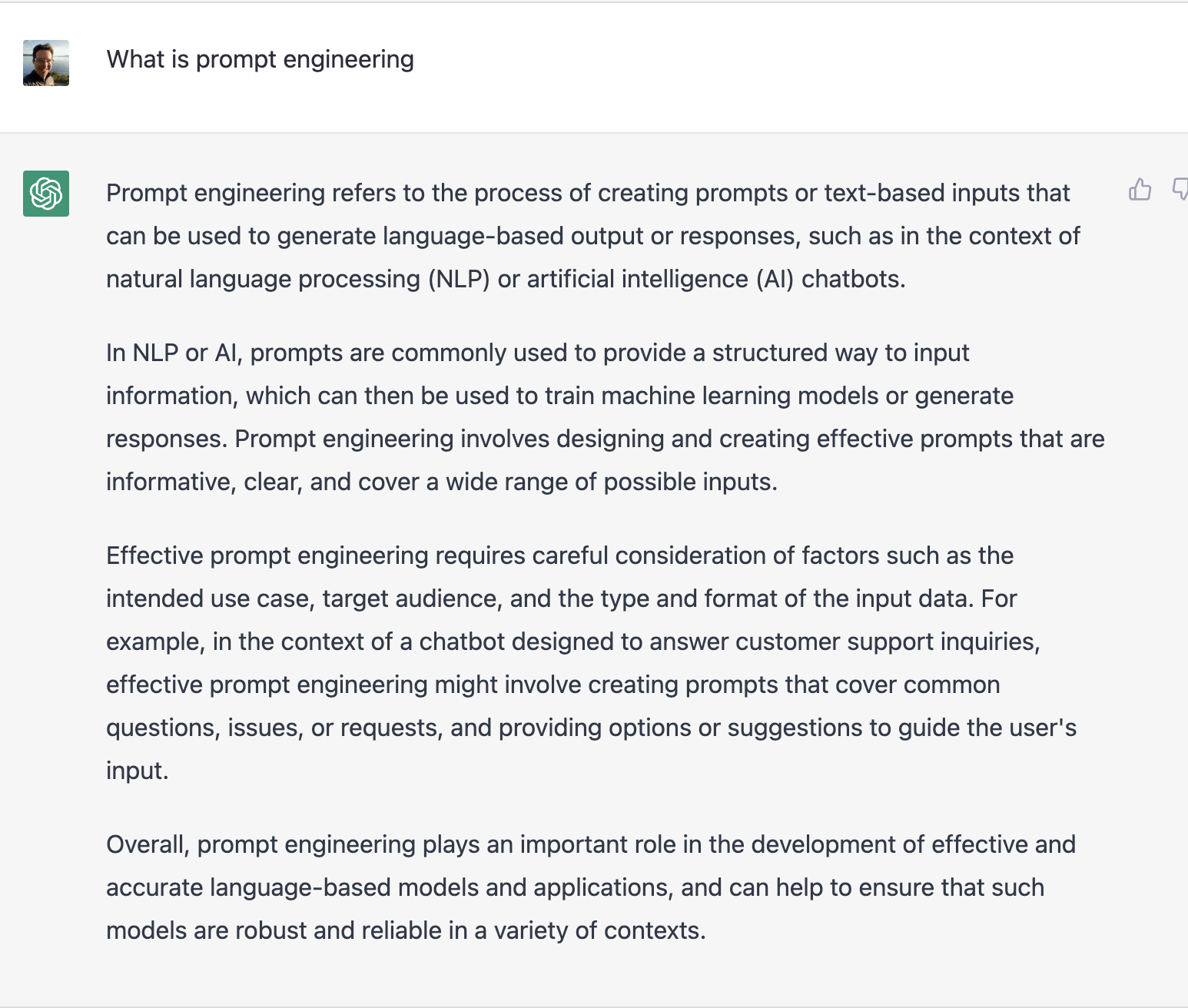

What is “Prompt Engineering”?

Prompt engineering is the process of crafting targeted inputs so that a machine returns relevant answers that match what you expect for a problem. It also refers to the Socratic, iterative asking of questions when you are trying to explore an idea.

If you know what you need to know but don’t know how to tell a computer how to do that task, you’re ready to start studying prompt engineering to better translate your ideas into computer speak.

Here’s how ChatGPT defined prompt engineering:

Am I already practicing Prompt Engineering?

If you’re using tools like Notion, Google Workspace, and Microsoft Office 365, you might already be training a model today to improve the ability of computers to respond to you. These tools are already suggesting next actions, spreadsheet formulas, and other helpful shortcuts. It will take some new tools to get us from solving a math problem to accurately describing “here’s how we make sure that leads go from a web form fill to a seller that needs to handle those leads.”

We all need feedback on how to rephrase the questions we ask of each other so that they also describe the problem accurately for computers. One way to do this is to change our work process by deliberately adding practice to the work.

For example, OpenSpec is a tool that fronts a GPT API call with some targeted questions phrased in the form of a template. Computers, it turns out, do well with templated information that is pretty regular.
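To make the template idea concrete, here is a small sketch of filling a fixed set of questions into a regular prompt. This is not OpenSpec's actual template or code. The template text, the field names, and the `fillTemplate` helper are all hypothetical, and the example reuses the lead-routing scenario from above.

```javascript
// Hypothetical template: each {field} is a targeted question the person answers.
const PROCESS_TEMPLATE =
  "Process: {process}\nStarts when: {trigger}\nEnds when: {outcome}";

// Replace every {field} in the template with the matching answer.
// Throws if an answer is missing, so gaps show up before the prompt is sent.
function fillTemplate(template, answers) {
  return template.replace(/\{(\w+)\}/g, (match, field) => {
    if (!Object.prototype.hasOwnProperty.call(answers, field)) {
      throw new Error('Missing answer for "' + field + '"');
    }
    return String(answers[field]);
  });
}

const leadRoutingPrompt = fillTemplate(PROCESS_TEMPLATE, {
  process: "lead routing",
  trigger: "a visitor fills out a web form",
  outcome: "a seller is assigned the lead",
});
console.log(leadRoutingPrompt);
```

The resulting text is what you would pass along to the model. The regular shape is the point: every request arrives with the same fields in the same order.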

If you’re thinking about how to rephrase the work that you ask other people to do so that it’s easier to understand, you’re doing work similar to what you would do to make it easy for a computer to understand.

There are a few principles to consider:

- Start with a process you know. It doesn’t have to be a work task. (For example, this PB&J sandwich recipe helps to explain the problem.)
- Shorter and more concise is better. These models don’t have a lot of memory yet, so telling them exactly what to do produces a better result.
- Think of your process as a series of connected steps, with logical gates that would let you know whether to proceed or not.
- Phrase your inputs sequentially, so that the model can adapt sequentially.
- Be open to asking simple questions, like “why is that happening?” or “explain this part of the process” or “can you simplify this?”
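The "connected steps with logical gates" principle can be sketched as a tiny helper that turns an ordered list of steps into a short, sequential prompt. The function name `buildPrompt`, the `action` and `gate` fields, and the sandwich steps are illustrative assumptions, not a standard format.

```javascript
// Build a prompt from ordered steps. A step may carry a gate:
// a condition that must hold before moving on to the next step.
function buildPrompt(goal, steps) {
  const lines = ["Goal: " + goal, "Follow these steps in order:"];
  steps.forEach((step, index) => {
    let line = (index + 1) + ". " + step.action;
    if (step.gate) {
      line += " Only continue if " + step.gate + ".";
    }
    lines.push(line);
  });
  return lines.join("\n");
}

const sandwichPrompt = buildPrompt("Make a PB&J sandwich", [
  { action: "Lay out two slices of bread.", gate: "both slices are flat on the plate" },
  { action: "Spread peanut butter on one slice." },
  { action: "Spread jelly on the other slice." },
  { action: "Press the slices together, spreads facing in." },
]);
console.log(sandwichPrompt);
```

Writing the steps as data first forces you to decide what each step is and where the process should stop, which is most of the work of a good prompt.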

How would you improve our Google Apps Script example?

The example we used at the beginning of this essay was a first draft.

What things might we do to improve it and make it more resilient? Because this is going to be used in a Google Sheet, we might want to do the following:

- If the sheet already exists, append to it
- If there is data there already, don’t rewrite the header row every time
- Make it modular, so it’s easy to add another API that might create another tab in our sheet
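Here is one possible shape for those three improvements, as a sketch and not a finished tool. The names `rowsToAppend`, `loadIntoTab`, and `loadAll`, the tab names, and the example.com URLs are hypothetical. It assumes each API returns a JSON array of flat records whose fields stay in the same order from one run to the next.

```javascript
// Pure helper: decide which rows to write, given how many rows the tab already holds.
// The header row is included only when the tab is empty.
function rowsToAppend(records, existingRowCount) {
  if (records.length === 0) {
    return [];
  }
  const headers = Object.keys(records[0]);
  const dataRows = records.map((record) =>
    headers.map((header) => {
      const value = record[header];
      return value === undefined || value === null ? "" : value;
    })
  );
  return existingRowCount === 0 ? [headers, ...dataRows] : dataRows;
}

// Apps Script glue: fetch one API and append its records to the named tab,
// creating the tab if it does not exist yet.
function loadIntoTab(tabName, url) {
  const spreadsheet = SpreadsheetApp.getActiveSpreadsheet();
  const sheet = spreadsheet.getSheetByName(tabName) || spreadsheet.insertSheet(tabName);
  const records = JSON.parse(UrlFetchApp.fetch(url).getContentText());
  const lastRow = sheet.getLastRow(); // 0 when the tab is empty
  const rows = rowsToAppend(records, lastRow);
  if (rows.length === 0) {
    return;
  }
  sheet.getRange(lastRow + 1, 1, rows.length, rows[0].length).setValues(rows);
}

// Adding another API is one more line here. URLs are placeholders.
function loadAll() {
  loadIntoTab("Accounts", "https://example.com/accounts.json");
  loadIntoTab("Contacts", "https://example.com/contacts.json");
}
```

One design note: the append logic trusts that new records line up with the existing header. If the schema changes between runs, you would want to compare the first row of the tab against the incoming field names before writing.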

You can ask ChatGPT to do all of these things. As a side note, I wouldn’t recommend sharing anything confidential with it until there are assurances that copies of a large language model like this one can be run in confidence in your own environment.

What’s the takeaway? Generative transformers and large language models are making it increasingly important to learn how to phrase questions to computers. Adopt the idea of “Prompt Engineering” to ensure better outcomes for complex processes.