It’s the problem that everyone knows. A customer request gets lost between sales and support. A bug report sits in the queue because engineering never got the full context. A project deadline slips because the handoff between design and development missed a critical detail.

These aren’t just annoying moments. They’re process failures that create rework, missed deadlines, and frustrated teams. Work transitions between teams are our biggest bottleneck, and most of the time, we don’t even know they’re failing until we’re already dealing with the consequences.

The invisible intelligence gap

We have better visibility into our code than we do into our processes. Engineering teams track every commit, every deployment, every bug fix. When a build breaks, they might not know exactly what went wrong, but they get a signal that they need to investigate before they can move on to other tasks.

We’re missing an equivalent signal for the handoffs between teams, the moments where the work of getting things done changes hands. What if we designed our handoffs to be measurable and observable from day one, just as we build continuous delivery pipelines for code?

In a perfect world, we’d be able to watch, learn, and catch errors before they become problems.

It turns out that AI is pretty good at identifying true/false conditions from ambiguous input, and many handoff conditions look just like that. Imagine knowing that handoffs between engineering and ops are failing 30% of the time, and having AI suggest specific improvements based on what works.
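To make the "failing 30% of the time" idea concrete, here is a minimal Python sketch that computes a failure rate for each pair of teams. It assumes each handoff has already been labeled as succeeded or failed (the kind of true/false judgment described above, whether made by AI or a human reviewer); the tuple layout and sample data are hypothetical.

```python
from collections import defaultdict


def failure_rates(handoffs):
    """Return {(from_team, to_team): fraction of handoffs that failed}.

    Each handoff is a hypothetical (from_team, to_team, succeeded) tuple.
    The succeeded flag is assumed to come from an upstream classifier or reviewer.
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for from_team, to_team, succeeded in handoffs:
        pair = (from_team, to_team)
        totals[pair] += 1
        if not succeeded:
            failures[pair] += 1
    return {pair: failures[pair] / totals[pair] for pair in totals}


# Hypothetical sample: 3 of 10 engineering-to-ops handoffs failed.
sample = [("engineering", "ops", i >= 3) for i in range(10)]
sample += [("sales", "support", True), ("sales", "support", False)]
print(failure_rates(sample))  # {('engineering', 'ops'): 0.3, ('sales', 'support'): 0.5}
```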

This might mean something as simple as tracking an engineering request for wait time, or it might mean reengineering a bug reporting process from end to end. It’s about treating our operations like we treat our code: measurable, observable, and continuously improvable.

The promise of incremental improvement

The future of team handoffs involves giving teams the intelligence they need to catch errors before they become problems, with AI as a silent partner that helps prevent the small failures that add up to big headaches.

The fundamental problem with team handoffs isn’t that they fail. It’s that we don’t find out about the failures until they’ve already become big problems.

Here are some typical causes for our process blindness:

Context gets lost in transitions. When work moves from one team to another, critical information often gets filtered out. The receiving team gets a sanitized version that misses the nuance, the edge cases, or the historical context that could prevent problems.

We have no baseline for what “good” looks like. Without observability built into our processes, we can’t measure handoff effectiveness. We don’t know if a 2-day handoff is fast or slow. We can’t tell if the information being passed is complete or missing key details.

Teams work in silos, missing patterns. When handoffs fail, each team blames the other. Sales thinks support dropped the ball. Engineering thinks QA is being unreasonable. Without data, we’re stuck in a cycle of finger-pointing instead of process improvement.

Human memory is fallible. We forget what we learned from last month’s handoff failure. We don’t remember which combinations of factors led to success. Every handoff becomes a fresh experiment instead of building on past learnings.

We treat handoff failures as inevitable rather than preventable.

Some parallels from engineering

Good development teams build observability into their processes to know when they hit an error. The goal is to see exactly when something breaks and why.

When a deployment fails, engineering teams investigate. They look at the logs, trace the execution path, identify the root cause, and fix the underlying issue. They treat every failure as a learning opportunity.

But when a process handoff fails, what do we often do?

We blame the other team. We assume it was a one-off problem. We move on without learning anything. We don’t have the data to do anything else.

What if we treated our operations like engineering teams treat their code?

What if we built observability into our handoffs from the start, so we could see exactly when and why they fail? That sounds like a better recipe for compounding success.

Building observability into team handoffs

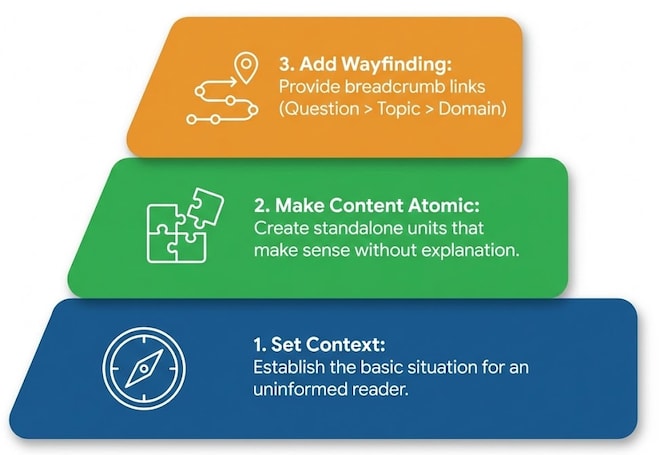

The key insight is that we need to design our handoffs to be observable from day one. Instead of trying to add monitoring to existing broken processes, we need to build processes that are measurable and improvable from the start.

What we need to track

- Expected vs actual sequence: What should happen vs what actually happened
- Time delays and bottlenecks: Where work gets stuck and why
- Context preservation: How much information makes it through the handoff
- Success/failure patterns: What combinations of factors lead to good outcomes
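The first two items on that list can be sketched in a few lines of Python, assuming each handoff produces an ordered list of (step name, timestamp) events. The sketch compares the actual steps against an expected sequence and reports how long work sat between consecutive steps. The step names, the `EXPECTED` sequence, and the timestamps are hypothetical examples.

```python
from datetime import datetime

# Hypothetical designed sequence for a support-to-engineering escalation.
EXPECTED = ["reported", "triaged", "escalated", "acknowledged", "resolved"]


def compare_sequence(events):
    """Compare actual (step, timestamp) events against EXPECTED.

    Returns (missing steps, unexpected steps, hours between consecutive events).
    """
    actual_steps = [step for step, _ in events]
    missing = [s for s in EXPECTED if s not in actual_steps]
    unexpected = [s for s in actual_steps if s not in EXPECTED]
    gaps = {}
    for (prev_step, prev_ts), (step, ts) in zip(events, events[1:]):
        gaps[f"{prev_step} -> {step}"] = (ts - prev_ts).total_seconds() / 3600
    return missing, unexpected, gaps


# Hypothetical actual history for one ticket.
events = [
    ("reported", datetime(2024, 5, 1, 9, 0)),
    ("escalated", datetime(2024, 5, 1, 10, 30)),
    ("info_requested", datetime(2024, 5, 1, 15, 30)),
    ("resolved", datetime(2024, 5, 2, 9, 0)),
]
missing, unexpected, gaps = compare_sequence(events)
print("missing:", missing)        # steps the process expected but never saw
print("unexpected:", unexpected)  # steps outside the designed process
print("gaps (hours):", gaps)      # where work sat waiting
```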

How to implement it

- Event logging: Capture every handoff moment with structured data
- Real-time monitoring: Watch handoffs as they happen, not after they fail
- Pattern recognition: Use AI to identify what works and what doesn’t
- Continuous improvement: Feed insights back into process design
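Event logging and real-time monitoring can start very small. This minimal Python sketch, assuming handoff events are appended as JSON Lines to a local file (a stand-in for whatever event store a team actually uses), logs structured events and flags handoffs that were sent but not accepted in time. The field names, event types, and the 4-hour threshold are hypothetical.

```python
import json
import tempfile
import time
from pathlib import Path

MAX_WAIT_SECONDS = 4 * 3600  # hypothetical alert threshold: 4 hours


def log_handoff(path, handoff_id, from_team, to_team, event, context=None, ts=None):
    """Append one structured handoff event to a JSON Lines file."""
    record = {
        "handoff_id": handoff_id,
        "from_team": from_team,
        "to_team": to_team,
        "event": event,  # hypothetical event types: "sent", "accepted"
        "context": context or {},
        "ts": ts if ts is not None else time.time(),
    }
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def stale_handoffs(path, now=None):
    """Return IDs of handoffs sent but not accepted within MAX_WAIT_SECONDS."""
    now = now if now is not None else time.time()
    sent, accepted = {}, set()
    with Path(path).open(encoding="utf-8") as f:
        for line in f:
            rec = json.loads(line)
            if rec["event"] == "sent":
                sent[rec["handoff_id"]] = rec["ts"]
            elif rec["event"] == "accepted":
                accepted.add(rec["handoff_id"])
    return [hid for hid, sent_ts in sent.items()
            if hid not in accepted and now - sent_ts > MAX_WAIT_SECONDS]


# Usage with a throwaway log file.
log = Path(tempfile.mkdtemp()) / "handoff_events.jsonl"
log_handoff(log, "H-1", "support", "engineering", "sent", ts=0)
log_handoff(log, "H-2", "support", "engineering", "sent", ts=0,
            context={"error_message": "401", "browser": "Firefox"})
log_handoff(log, "H-2", "support", "engineering", "accepted", ts=600)
print(stale_handoffs(log, now=5 * 3600))  # ['H-1']
```

Running `stale_handoffs` on a schedule is the simplest form of watching handoffs as they happen; the same log later feeds the pattern-recognition step.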

We want to create better processes that are transparent, measurable, and continuously improvable.

How can AI help with process observability?

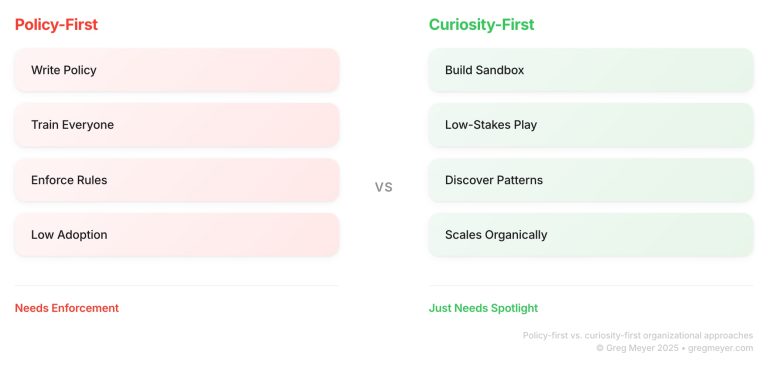

Without the context of the process, AI will deliver a middling result when you ask it to analyze why something failed during a team handoff. AI systems, like humans, need to see a pattern before they know what to look for.

But once there is a template, AI becomes particularly useful:

- Context awareness: AI can understand the full context of what’s being handed off, including historical patterns and team preferences.
- Risk assessment: AI can identify potential handoff failures before they happen, based on patterns it’s learned from historical data.
- Process optimization: AI can suggest improvements based on what’s worked in the past, helping teams iterate toward better handoffs.
- Real-time feedback: AI can provide immediate insights during handoffs, helping teams catch issues before they become problems.
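The risk-assessment idea can be illustrated without any machine learning at all. This minimal Python sketch, assuming each past handoff is recorded as the set of context fields it included plus whether it failed, estimates a new handoff’s risk from the historical failure rate of handoffs with the same field signature. It is a simple frequency lookup standing in for the AI model the text describes; the field names and sample history are hypothetical.

```python
from collections import Counter


def train_risk_table(history):
    """Map each field signature to its historical failure rate.

    history: list of (fields_present: frozenset of str, failed: bool) pairs.
    """
    seen = Counter()
    failed = Counter()
    for fields, did_fail in history:
        seen[fields] += 1
        if did_fail:
            failed[fields] += 1
    return {fields: failed[fields] / seen[fields] for fields in seen}


def assess_risk(table, fields_present, default=None):
    """Look up the failure rate for a new handoff; default if never seen before."""
    return table.get(frozenset(fields_present), default)


# Hypothetical history: handoffs with browser details rarely failed.
history = (
    [(frozenset({"error_message", "browser"}), False)] * 9
    + [(frozenset({"error_message", "browser"}), True)] * 1
    + [(frozenset({"error_message"}), True)] * 3
    + [(frozenset({"error_message"}), False)] * 1
)
table = train_risk_table(history)
print(assess_risk(table, {"error_message"}))             # 0.75
print(assess_risk(table, {"error_message", "browser"}))  # 0.1
```

Returning `None` for an unseen signature makes "no history yet" explicit instead of pretending to know the risk.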

AI does the heavy data crunching and surfaces the insights; teams then use what they learn to do a better job with the next steps.

How do we make this real?

Let’s walk through how observability could transform a real ops scenario.

The Problem

Customer issues get escalated from frontline support to engineering, but critical context gets lost in the handoff. The engineering team gets a vague description like “customer can’t log in” without the specific error message, browser version, or steps to reproduce. The issue bounces back and forth while the customer waits, and the support team has no visibility into what engineering actually needs.

Building Observability

The team starts logging every escalation handoff with structured data:

- When the escalation happened and how long the customer had been waiting
- What information was included in the initial handoff (error messages, browser details, reproduction steps)
- How many back-and-forth cycles occurred before resolution
- Whether the engineering team had to ask for additional information
- Total time from escalation to resolution
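One logged escalation might look like this minimal Python dataclass, assuming the team records timestamps and the key context fields for each ticket. The class name, field names, and sample values are hypothetical; adapt them to whatever your ticketing system exports.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class EscalationRecord:
    """One support-to-engineering escalation (hypothetical field names)."""
    ticket_id: str
    customer_waiting_since: datetime
    escalated_at: datetime
    error_message: Optional[str] = None
    browser: Optional[str] = None
    repro_steps: Optional[str] = None
    back_and_forth_cycles: int = 0
    engineering_asked_for_more_info: bool = False
    resolved_at: Optional[datetime] = None

    def hours_waiting_before_escalation(self) -> float:
        return (self.escalated_at - self.customer_waiting_since).total_seconds() / 3600

    def hours_to_resolution(self) -> Optional[float]:
        """Total time from escalation to resolution, or None if still open."""
        if self.resolved_at is None:
            return None
        return (self.resolved_at - self.escalated_at).total_seconds() / 3600

    def included_context(self) -> list:
        """Names of the key context fields supplied in the initial handoff."""
        fields = {"error_message": self.error_message,
                  "browser": self.browser,
                  "repro_steps": self.repro_steps}
        return [name for name, value in fields.items() if value]


rec = EscalationRecord(
    ticket_id="T-1042",
    customer_waiting_since=datetime(2024, 5, 1, 8, 0),
    escalated_at=datetime(2024, 5, 1, 10, 0),
    error_message="401: token expired",
    browser="Firefox 125",
    back_and_forth_cycles=1,
    resolved_at=datetime(2024, 5, 1, 13, 0),
)
print(rec.hours_waiting_before_escalation())  # 2.0
print(rec.hours_to_resolution())              # 3.0
print(rec.included_context())                 # ['error_message', 'browser']
```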

AI Pattern Recognition

Once there is enough data to analyze, AI identifies clear patterns: escalations with specific error messages and browser details are resolved 4x faster than those without. Issues escalated during business hours are resolved 2x faster than after-hours escalations. Support teams that include customer impact details get faster responses from engineering.
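Whatever patterns AI proposes, each one can be sanity-checked with a much simpler comparison, sketched here in Python: split the logged escalations on one factor and compare median resolution times. This is a plain group-by, not an AI technique, and the dictionary keys and sample hours are invented for illustration.

```python
from statistics import median


def compare_groups(escalations, predicate):
    """Compare median resolution hours for escalations that do / don't match predicate.

    Returns (median_with, median_without, speedup), where speedup is how many
    times faster the matching group resolves.
    """
    with_group = [e["hours_to_resolve"] for e in escalations if predicate(e)]
    without_group = [e["hours_to_resolve"] for e in escalations if not predicate(e)]
    if not with_group or not without_group:
        raise ValueError("need data in both groups to compare")
    m_with, m_without = median(with_group), median(without_group)
    return m_with, m_without, m_without / m_with


# Hypothetical log entries; the hours are made up for illustration.
escalations = [
    {"has_error_and_browser": True, "after_hours": False, "hours_to_resolve": 2},
    {"has_error_and_browser": True, "after_hours": True, "hours_to_resolve": 3},
    {"has_error_and_browser": True, "after_hours": False, "hours_to_resolve": 2},
    {"has_error_and_browser": False, "after_hours": False, "hours_to_resolve": 8},
    {"has_error_and_browser": False, "after_hours": True, "hours_to_resolve": 12},
    {"has_error_and_browser": False, "after_hours": False, "hours_to_resolve": 8},
]
print(compare_groups(escalations, lambda e: e["has_error_and_browser"]))
print(compare_groups(escalations, lambda e: not e["after_hours"]))
```

Medians are used rather than means so that a single ticket that sat over a long weekend doesn’t dominate the comparison.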

The Team’s Response

Instead of guessing what engineering needs, the support team now has data. They create a simple escalation template that requires error messages, browser details, and customer impact. They start tracking which support agents consistently provide complete information and which ones need coaching.
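A minimal Python sketch of that template check and the per-agent tracking, assuming escalations arrive as dictionaries with an `agent` key. The `REQUIRED_FIELDS` tuple, agent names, and sample tickets are hypothetical.

```python
from collections import defaultdict

# Hypothetical required fields for the escalation template.
REQUIRED_FIELDS = ("error_message", "browser", "customer_impact")


def missing_fields(escalation):
    """Return required template fields that are absent or blank."""
    return [f for f in REQUIRED_FIELDS if not str(escalation.get(f) or "").strip()]


def completeness_by_agent(escalations):
    """Fraction of each agent's escalations that passed the template check."""
    total = defaultdict(int)
    complete = defaultdict(int)
    for e in escalations:
        agent = e["agent"]
        total[agent] += 1
        if not missing_fields(e):
            complete[agent] += 1
    return {agent: complete[agent] / total[agent] for agent in total}


escalations = [
    {"agent": "ana", "error_message": "403 Forbidden", "browser": "Chrome 124",
     "customer_impact": "Cannot access billing"},
    {"agent": "ana", "error_message": "500", "browser": "Safari 17",
     "customer_impact": "Checkout blocked"},
    {"agent": "ben", "error_message": "", "browser": "Edge",
     "customer_impact": "Can't log in"},
    {"agent": "ben", "error_message": "timeout", "browser": "Firefox",
     "customer_impact": "Reports delayed"},
]
print(missing_fields(escalations[2]))      # ['error_message']
print(completeness_by_agent(escalations))  # {'ana': 1.0, 'ben': 0.5}
```

Running `missing_fields` before the escalation is submitted turns the template into real-time feedback; `completeness_by_agent` points coaching at the people who need it.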

Measurable Outcome

Within a month, the average escalation resolution time drops from 8 hours to 3 hours. The number of back-and-forth cycles drops by 70%. Customer satisfaction scores improve because issues get resolved faster, and support agents feel more confident because they know what information to collect.

These numbers are hypothetical, but the path to making them real is clear.

The team can see exactly what works and why, then build those patterns into their process.

Getting started … one step at a time

The good news is that you don’t need to rebuild everything at once. Start with one critical handoff process and build observability into it from the start.

Pick a high-impact handoff: Choose a handoff that’s causing the most problems or affecting the most people.

Design for observability: Build measurement and monitoring into the process from day one, not as an afterthought.

Start logging events: Capture the data you need to understand what’s working and what’s not.

Look for patterns: Use AI to identify what leads to successful handoffs and what doesn’t.

Iterate and improve: Use the insights to make your processes better, then measure the improvement.

The key is to start small and build momentum. Every handoff you make observable is a step toward better team coordination.

What’s the takeaway? The teams that improve their handoff observability will have better processes, happier teams, and more reliable outcomes. They’ll be the ones setting the standard for how teams work together in the age of AI.