Apple announced Apple Intelligence at its Worldwide Developers Conference (WWDC) this year. Beyond the clever wordplay of a product whose initials spell “AI”, Apple made a smart move with this release that software makers should pay attention to: it took a walled-garden approach to a new feature and enabled that feature to use AI.

Wait – this is a blog about data and operations (and frequently integration and automation) – why are you advocating a walled garden approach to AI?

I’m not saying it’s a good strategy for individual app developers, but it absolutely is a desirable benefit for the many consumers who haven’t used AI beyond perhaps trying a chatbot through Bing or OpenAI, or a simple image edit.

What do buyers want?

I believe software purchasers want simple outcomes:

- Make it work
- Give me something new
- Don’t make me think too hard about what I’m getting and how it will change what I’m doing today

This sounds like a paradox, but it is in line with my favorite article on the subject, John Gourville’s Eager Sellers and Stony Buyers: Understanding the Psychology of New-Product Adoption (Harvard Business Review, 2006). Gourville writes:

> Many products fail because of a universal, but largely ignored, psychological bias: People irrationally overvalue benefits they currently possess relative to those they don’t. The bias leads consumers to value the advantages of products they own more than the benefits of new ones. It also leads executives to value the benefits of innovations they’ve developed over the advantages of incumbent products.

Buyers are overwhelmed by choice and overweight the value of the tools they have (even if they are relatively simple compared to the competition). Sellers believe that buyers will easily see the benefit of something new and underweight the resistance to change.

A simple example: using AI to create an image

Today, I wanted to edit an existing image to demonstrate possible design choices for an event, starting with the image of a hotel ballroom as below.

I wanted to show some mockups or wireframes in a room of the same dimensions, removing some elements of the existing photo and inpainting new ones on top of it. This is similar to what you would do in an image editing tool to mock up an example.

ChatGPT created a few examples, but failed at the intent of the prompt.

I tried some other services and got similar results:

- OpenAI took the picture and gave back an interpretation rather than an overlay
- Claude refused to do anything
- A number of other interior design apps promised overlay designs and produced results similar to ChatGPT’s (probably because they are GPT wrappers in disguise)

In short, the AI tools could not turn my basic intent into a usable prototype because they don’t work that way. A GPT-style transformer “looks” for the next most likely token, and the current generation of tools doesn’t let you prompt: “produce a transparent layer that can be composited over the current image using the same scale so that I can get an idea of what this new image would look like in the same space.”

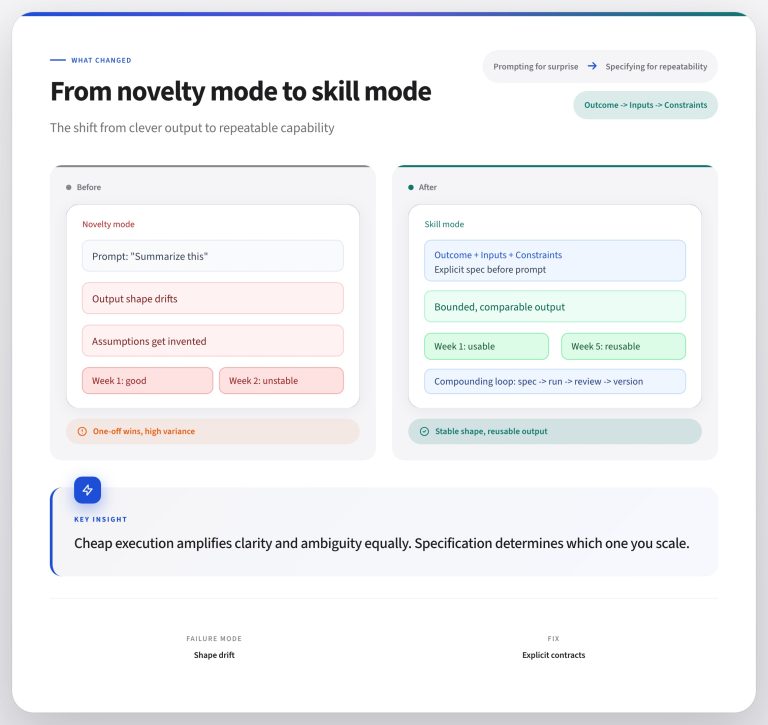

What they do: put lots of images in a blender and start rendering a result that is mathematically likely based on the pixels nearby. It’s kind of cool but not predictable or repeatable.

These image tools lack controls to constrain scale or style the way a mature product such as Photoshop/Creative Cloud does. I don’t expect them to yet, but I’m surprised that AI teams have not built power tools to address this gap.

What did I need vs what did I want?

None of these tools added a step to ask me what kind of solution I was seeking. A little extra discovery (are you looking to build a new image or overlay an existing one?) would have clarified my intent.

For today’s AI, this was a challenging product problem. I needed transparent assets that I could arrange in the space the way an interior designer would. I wanted the magic genie to solve the problem for me. Neither solution was available.

Solving this problem required two features that most of these AI tools don’t offer yet:

- Inpainting: keeping the current dimensions of the image while generating new content within one area of it based on a prompt. This “generative fill” could take a prompt and fill a portion of the image, e.g. “replace the stage in this photograph with a game show set.”
- Overlays: creating a transparent layer that shows, wireframe-style, what could exist in the space, kept at low opacity so that both the old and new images are visible in the same space.
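To make those two features concrete, here is a minimal sketch of the underlying pixel operations. It assumes images are tiny lists of RGB tuples rather than real files, and the function names `fill_masked` and `blend_overlay` are hypothetical, not any product’s API. The generative part (producing new pixels from a prompt) is deliberately left out; the sketch only shows how a mask confines changes to one area, and how a uniform opacity keeps the old and new images visible at the same scale.

```python
from typing import List, Tuple

# Hypothetical representation: an image is a list of rows of (R, G, B) tuples, 0-255.
Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Mask = List[List[bool]]


def _same_shape(a: list, b: list) -> bool:
    """True if two 2D grids have the same number of rows and row lengths."""
    return len(a) == len(b) and all(len(ra) == len(rb) for ra, rb in zip(a, b))


def fill_masked(base: Image, generated: Image, mask: Mask) -> Image:
    """Inpainting-style fill: use `generated` pixels only where mask is True.

    Everything outside the mask is left exactly as it was in `base`, and the
    output keeps the original dimensions.
    """
    if not (_same_shape(base, generated) and _same_shape(base, mask)):
        raise ValueError("base, generated, and mask must have the same dimensions")
    return [
        [g if m else b for b, g, m in zip(brow, grow, mrow)]
        for brow, grow, mrow in zip(base, generated, mask)
    ]


def blend_overlay(base: Image, overlay: Image, opacity: float) -> Image:
    """Overlay-style preview: composite `overlay` over `base` at a uniform opacity.

    Each channel becomes opacity * overlay + (1 - opacity) * base, so a low
    opacity shows the new design while the original room stays visible.
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be between 0 and 1")
    if not _same_shape(base, overlay):
        raise ValueError("base and overlay must have the same dimensions")
    return [
        [
            tuple(round(opacity * o + (1 - opacity) * b) for b, o in zip(bp, op))
            for bp, op in zip(brow, orow)
        ]
        for brow, orow in zip(base, overlay)
    ]


if __name__ == "__main__":
    photo = [[(100, 100, 100), (100, 100, 100)]]
    design = [[(200, 200, 200), (0, 0, 0)]]
    print(fill_masked(photo, design, [[True, False]]))
    # [[(200, 200, 200), (100, 100, 100)]]
    print(blend_overlay(photo, design, 0.25))
    # [[(125, 125, 125), (75, 75, 75)]]
```

Requiring matching dimensions in both functions mirrors the point above: the result only helps if the new design stays at the scale of the original photo.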

Another reason these tools may not support this kind of fine-grained editing is that it could also be used for other kinds of image manipulation, probably the kind that would land their makers in legal hot water. But perhaps there’s a way, once you prove you own an image, to use tools like this to quickly morph your pictures or to label them so they are identified as AI-created.

What’s the lesson for product builders?

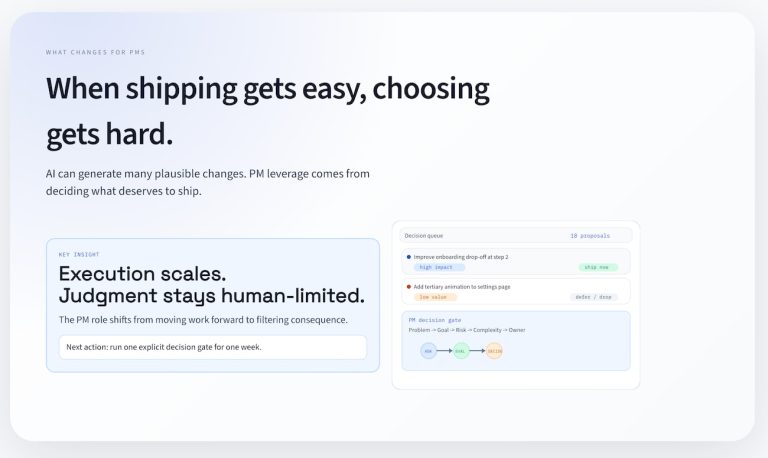

At first it feels easy when a feature claims it will solve your problem. (In the last few months, that claim has likely come with a sparkle emoji ✨ rather than specific product promises.)

From the user’s perspective, of course we want the software to “handle it” and produce a magic result, but there’s also a big opportunity for disappointment when you get a weird or nonsensical answer to your question. It’s even worse when the feature delivers progressively worse results the more you try, which can happen with generative tools.

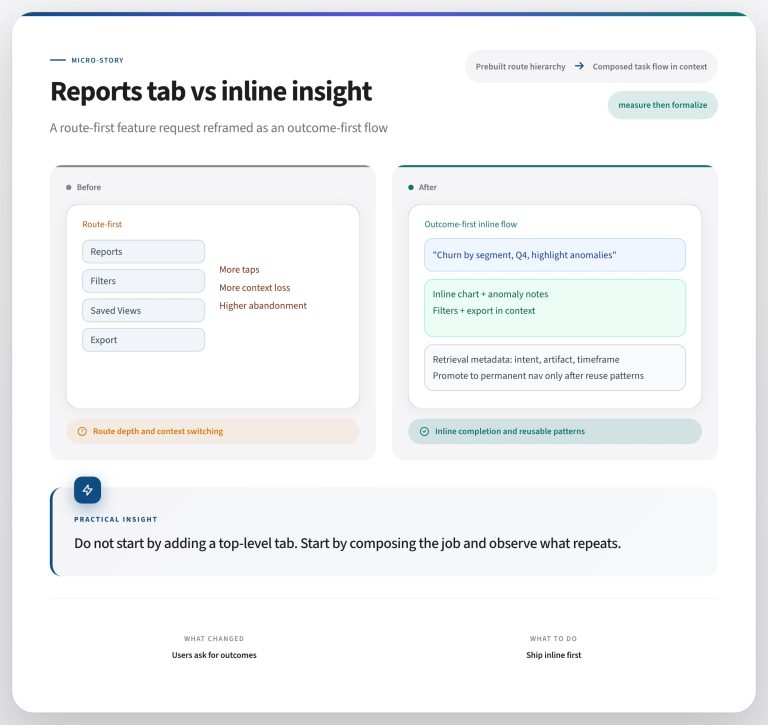

Product builders can indeed build magic features, but they feel more magical when they deliver exactly what’s asked for, or constrain the unexpected result to an area of the problem that the user controls.

Apple Intelligence is a masterclass in marketing and product precisely because it defines something brand new (a gateway to AI where some things can go to the magic box) and links it thematically to the hype and promise of the impressive thing without promising boffo results. Non-Apple users will scoff at the walled garden, and many Apple users will believe they are getting AI on their terms, behaving exactly the way they expected it to.

The product space of secure, local, limited, and fast AI with a cloud-based path to more and harder questions is a great product moat for Apple to build.

What’s the takeaway? Pick a small product surface and make it work well. It’s better to have a “boring” product that occasionally produces magical results than a sparkly AI product that constantly produces disappointment.