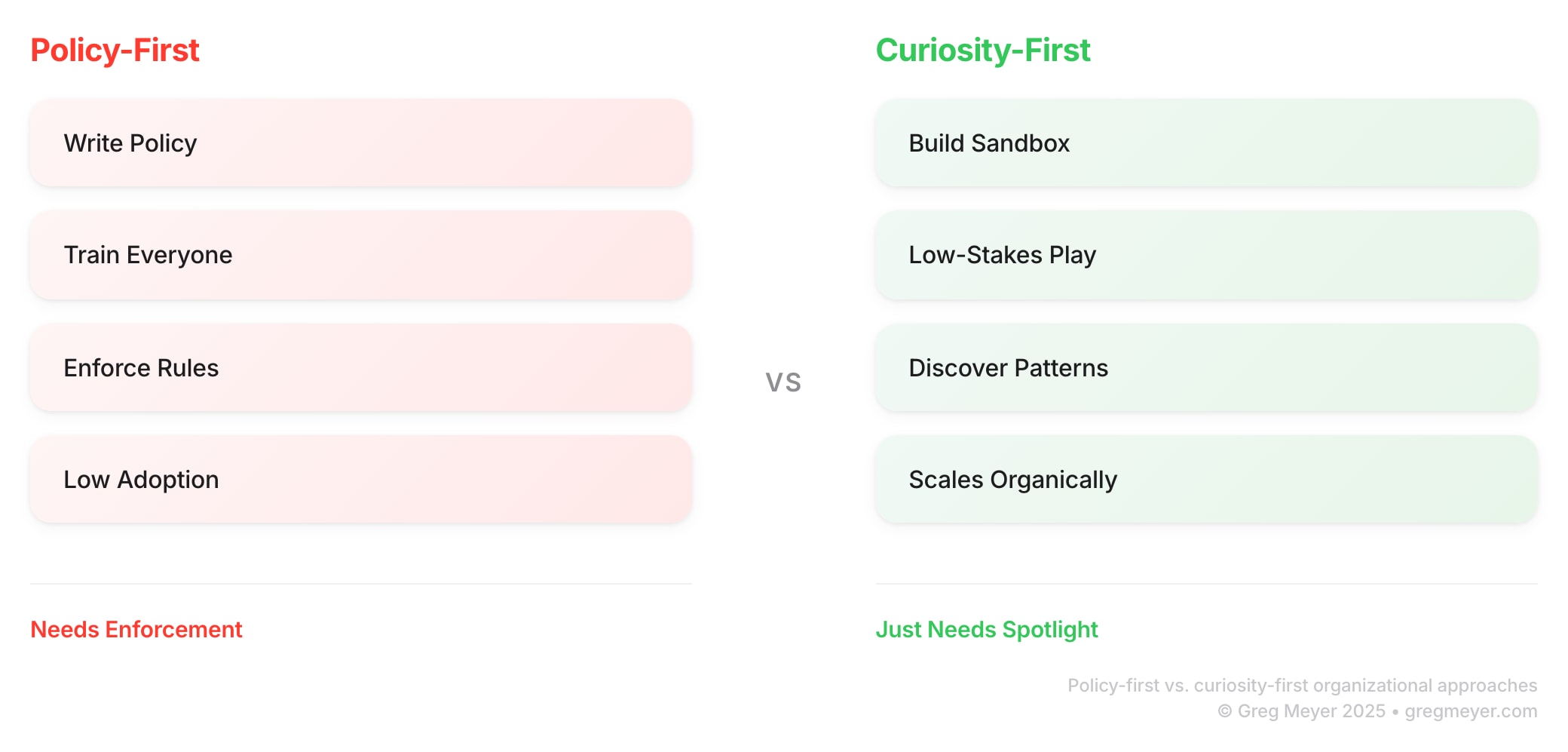

Have you tried to roll out an AI program at work? I think traditional policy-first approaches to AI adoption fail because they prioritize control over curiosity. The pockets with the most success start with safe experimentation spaces where people learn AI’s edges through low-stakes play, instead of being told exactly how to use the new tools.

Here’s the spoiler: because of the weird combination of human brains and the interactive token-tumblers we call LLMs, we’re often not exactly sure what’s going to happen in a given interaction. Sure, we can constrain a prompt until the outcome is close to deterministic, or use pydantic models to make sure the output fits a known shape. But some of the “creativity” we’re seeing in our experiments comes from letting the AI cook.
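To make the “known output” idea concrete, here’s a minimal sketch. It uses only Python’s standard library as a stand-in for the kind of check a pydantic model would do for you. The `Summary` shape, the `parse_reply` helper, and the sample reply are all made up for illustration.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Summary:
    """Hypothetical shape we want every model reply to fit."""
    title: str
    action_items: List[str]


def parse_reply(raw: str) -> Summary:
    """Parse a JSON reply and reject anything that doesn't match the shape."""
    data = json.loads(raw)  # raises ValueError (JSONDecodeError) on non-JSON text
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    title = data.get("title")
    items = data.get("action_items")
    if not isinstance(title, str):
        raise ValueError("'title' must be a string")
    if not isinstance(items, list) or not all(isinstance(i, str) for i in items):
        raise ValueError("'action_items' must be a list of strings")
    return Summary(title=title, action_items=items)


# Stand-in for a model reply; in a real experiment you'd paste actual output here.
reply = '{"title": "Weekly sync", "action_items": ["Send notes", "Book room"]}'
summary = parse_reply(reply)
print(summary.title, len(summary.action_items))
```

The check only pins down the shape of the reply. What the model actually says inside those fields is still free to surprise you, which is the part worth playing with.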

When you hand someone a policy document, you’re asking them to memorize rules. When you give them a sandbox, you’re inviting them to discover patterns. That difference between memorization and discovery is why curiosity scales faster than policy.

What are some obvious reasons for this discrepancy?

- Compliance needs enforcement.
- Curiosity just needs a spotlight.
- And once curiosity becomes capability, policy can catch up.

How do we make AI tools easier to try?

Your AI rollout needs a sandbox, not a strategy deck.

AI is a force multiplier for teams that learn its edges early, but it’s not a magic bullet. It’s a tool, and you have to use it to understand both its edges and the things it absolutely fails to do.

Your first experiments should have low stakes but high learning value.

They should teach you how unpredictable AI can be. The learning outcomes you’re seeking are how to rein it in, guide it, and eventually make it behave.

You’ve got to feel the weirdness before you can tame it.

If you’ve never pushed an LLM until it breaks, you don’t really know how it “thinks”.

The good news: you can learn that safely.

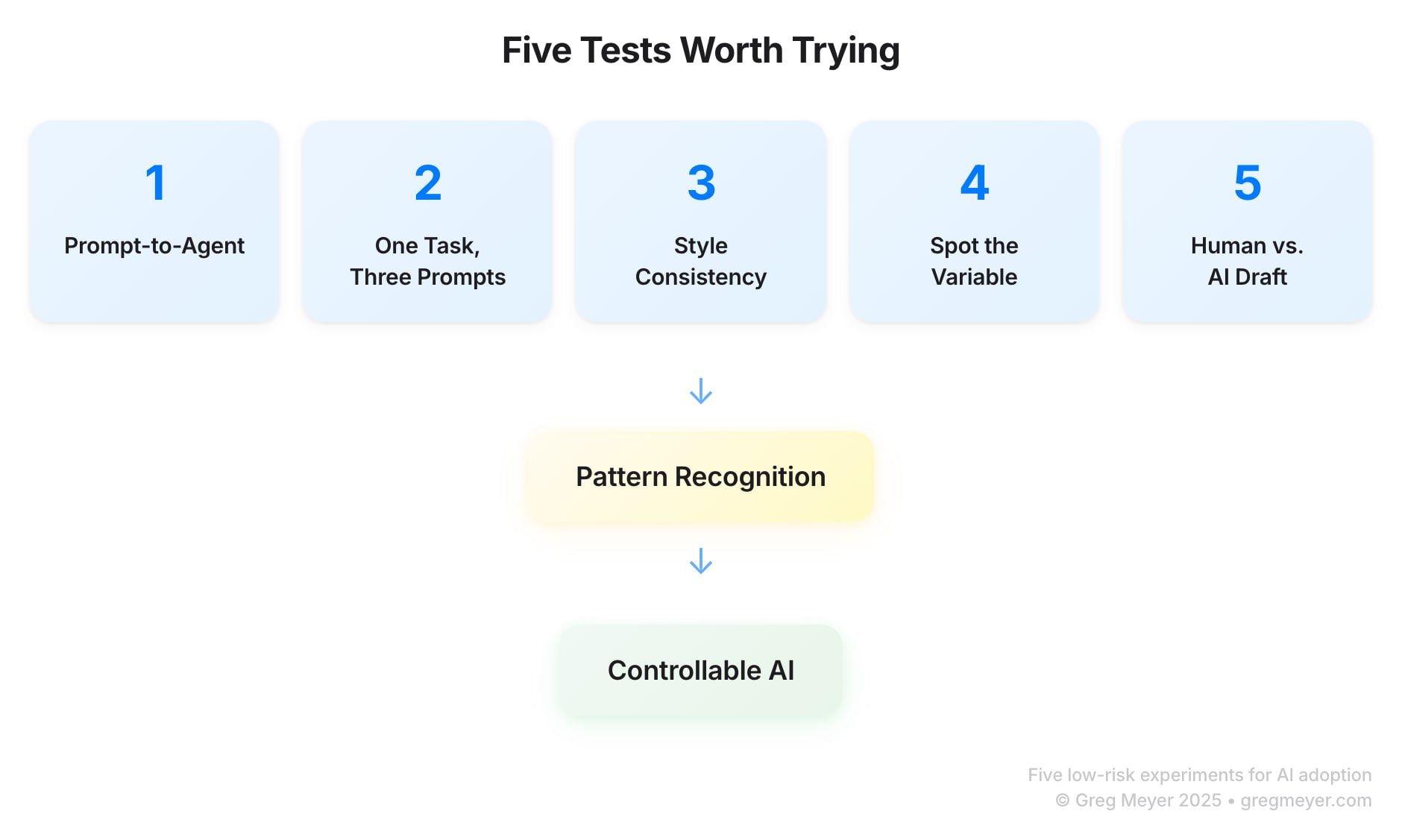

Here are five experiments simple enough to run on a coffee break, but rich enough to show you how AI actually behaves.

Five tests worth trying

Low risk, high learning. Each one teaches you how AI behaves — without touching production data.

- Prompt-to-Agent Test

  Ask an LLM to write the prompt you’d give to an AI agent to solve a problem.

  → Learn how models interpret goals and structure instructions.

- One Task, Three Prompts

  Run the same task (summarize a Slack thread, write an email, etc.) three ways.

  → See how phrasing changes output and what makes results more deterministic.

- Style Consistency Drill

  Have AI rewrite your text in your team’s tone.

  → Learn how system prompts encode voice and context.

- Spot the Variable

  Feed similar inputs (e.g., meeting notes) and track what drifts in the output.

  → Understand which parts of the model’s behavior are stable vs. random.

- Human vs. AI Draft

  Write something yourself, then generate it with AI. Compare.

  → Discover where AI accelerates vs. where it still lacks context.
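If you want a rough number for “what drifts” in tests like One Task, Three Prompts or Spot the Variable, here’s one possible sketch. It assumes you’ve pasted the outputs into a dictionary by hand, and it uses word-level overlap from Python’s `difflib` as a crude proxy for drift. The `similarity` and `drift_report` helpers and the sample text are invented for illustration.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import Dict, Tuple


def similarity(a: str, b: str) -> float:
    """Return a 0..1 ratio of how much two outputs overlap, word by word."""
    return SequenceMatcher(None, a.split(), b.split()).ratio()


def drift_report(outputs: Dict[str, str]) -> Dict[Tuple[str, str], float]:
    """Compare every pair of labelled outputs and return their similarity."""
    return {
        (left, right): similarity(outputs[left], outputs[right])
        for left, right in combinations(outputs, 2)
    }


# Hypothetical outputs pasted in from three runs of the same task.
outputs = {
    "run_1": "The team agreed to ship on Friday and review on Monday.",
    "run_2": "The team agreed to ship on Friday and review on Monday.",
    "run_3": "Shipping is planned for Friday; a review follows next week.",
}

report = drift_report(outputs)
for pair, score in sorted(report.items()):
    print(pair, round(score, 2))
```

A score of 1.0 means two runs matched word for word. Lower scores point at the pairs worth reading side by side. Word overlap says nothing about meaning, so treat it as a pointer for where to look, not a verdict.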

The goal isn’t perfection but pattern recognition.

Each of these tests shows you how controllable AI can become once you learn its quirks.

But most organizations don’t have a place where you can experiment like this.

You don’t have an AI playground yet

When organizations treat AI adoption like a compliance exercise, they’re trying to control behavior and avoid risk. That leads them to write a policy, train everyone, and enforce the rules.

There are good reasons to make sure that using AI doesn’t break your existing policies and rules.

But policies control behavior. They don’t inspire creativity.

Adoption starts with curiosity. People need to touch the thing, break it, and see what happens when they do. Trust in AI forms in low-stakes moments where mistakes are reversible and learning is visible.

What looks like fear is usually just missing infrastructure for safe failure.

Most of the best stuff I’ve seen started because someone was messing around after hours.

Build a place to play, then write policy

So here’s a proposed fix to help everyone get more curious. If you want people to embrace AI, give them a place to play.

Start small and visible with gestures that say “it’s safe to explore here.”

- Spin up an #ai-playground Slack channel for quick wins and funny failures
- Run a Prompt of the Week mini-challenge
- Let early adopters show off their messy experiments
- Have leaders post their own prompts that didn’t work and what they learned

When a manager says, “I’m still figuring this out too,” everything shifts. Experimenting becomes a collaborative game, or a healthy competition to show off some cool stuff.

Moving from “AI-curious” to “AI-capable”

Curiosity doesn’t scale organically. Here’s the part no one likes to talk about: sometimes, no one shows up.

You post a calendar invite for “AI Sandbox Hour,” and the same three people show up. Everyone else is “heads down,” in another meeting, or doesn’t know what to do yet.

It’s more fun to be redirected when you’re already in flow.

People are avoiding AI because the entry point to “do this with AI” feels like another meeting and another thing they might do wrong.

You start getting signal when the work crosses the line from interesting to useful. When people see five minutes saved or one click automated, a small thing feels big.

Momentum comes from seeing someone else win.

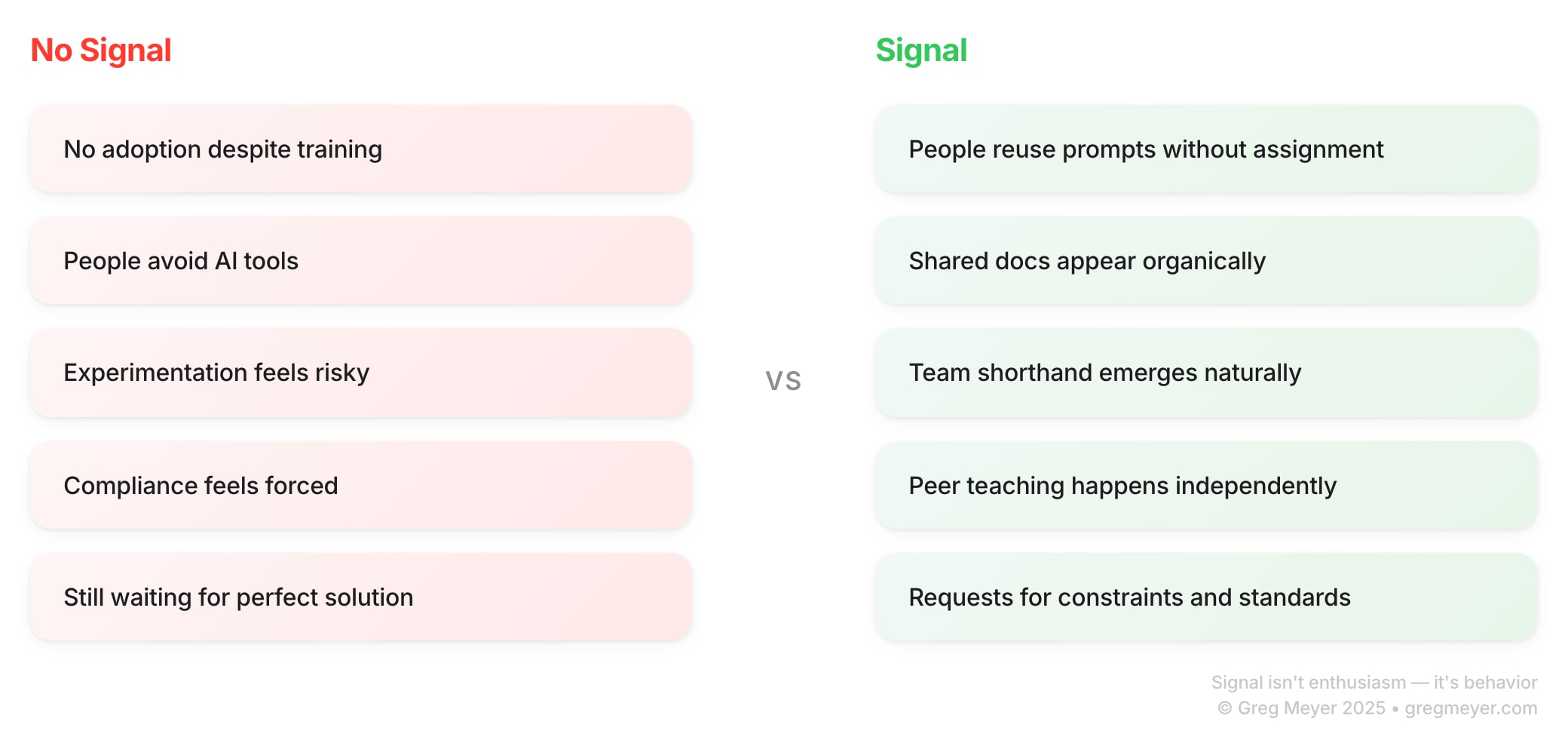

Signs you’re seeing good signal in your org

You’ll know curiosity is turning into capability when the system starts talking back.

Signal looks like:

- Someone reusing or improving a prompt you didn’t assign.
- A shared doc of “AI wins” that appears without permission.
- People saying things like “run it through the bot.”
- A teammate teaching another how to do it, and you hearing about it after.
- Requests for constraint: “What’s safe data to use?”

Those are inflection points where curiosity has crossed from play into process.

When you see those signs, capture them fast. Name them.

Give the behavior a handle: “Prompt Lab,” “Shortcut Series,” whatever fits.

Naming makes it real, and real things scale.

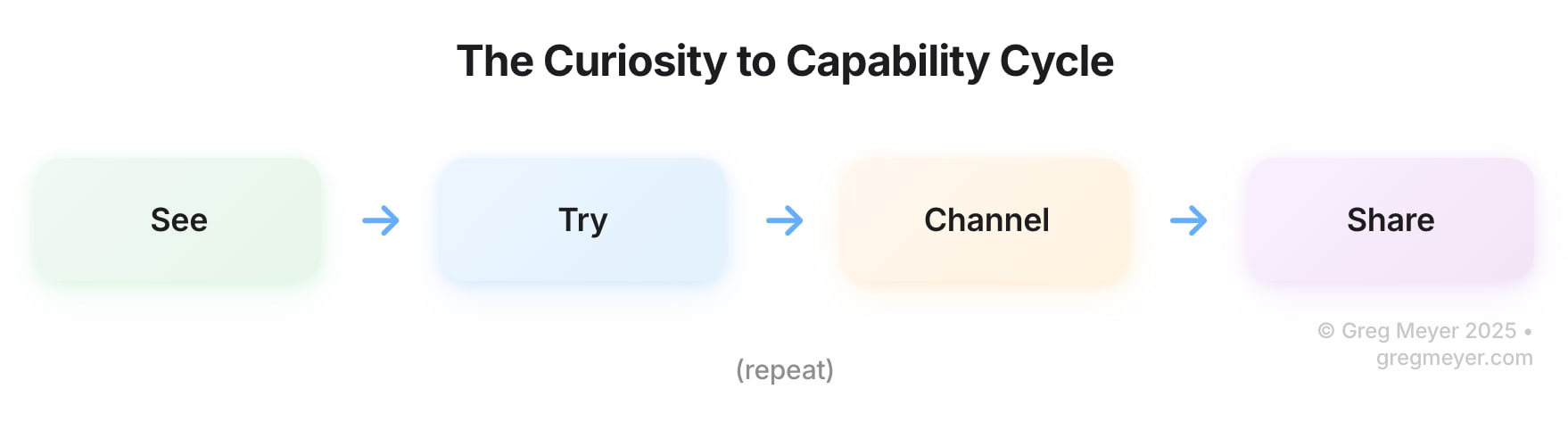

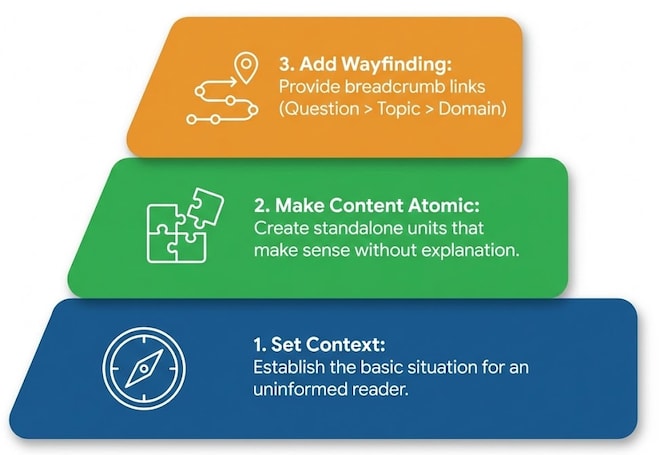

A roadmap from AI-curious to AI-capable

Here’s the pattern I’ve seen work best:

See → Try → Channel → Share

- See what others are experimenting with.

  The goal here isn’t to act, but to notice what’s possible.

  Which tasks are people quietly using AI for? Summaries? Formatting?

  You’re learning the shape of useful.

- Try something low-risk.

  Run your own test where failure costs nothing.

  Ask an LLM to rewrite a meeting summary, or have it generate a prompt for an internal agent.

  What does “good enough” look like for my workflow?

- Channel what you’ve learned.

  Once you’ve seen the range of output, tighten it.

  Add structure, context, or examples until the AI behaves predictably.

  How do I make this reliable enough to trust?

- Share what worked.

  Post the prompt. Record a short Loom. Drop a screenshot in #ai-playground.

  Does this spark adoption in someone else?

Each loop teaches the team how to balance creativity with control — the exact muscle they’ll need when AI touches production work.
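For the Channel step, “add structure, context, or examples” can be as plain as a fixed prompt layout. Here’s a small sketch of one way to do that. The `build_prompt` helper, its section labels, and the sample text are my own assumptions, not a standard format.

```python
from typing import List, Tuple


def build_prompt(task: str, context: str, examples: List[Tuple[str, str]], text: str) -> str:
    """Assemble a prompt with a fixed layout: task, context, examples, input."""
    parts = [f"Task: {task}", f"Context: {context}"]
    for number, (sample_in, sample_out) in enumerate(examples, start=1):
        parts.append(f"Example {number} input: {sample_in}")
        parts.append(f"Example {number} output: {sample_out}")
    parts.append(f"Input: {text}")
    parts.append("Output:")
    return "\n".join(parts)


# Hypothetical values; swap in a task and examples from your own workflow.
prompt = build_prompt(
    task="Rewrite the meeting summary as three bullet points.",
    context="Audience is the support team; keep it under 50 words.",
    examples=[("Long notes about a release...", "- Release moved to Friday")],
    text="Notes from today's planning call.",
)
print(prompt)
```

Keeping the layout fixed means that when the output changes, you know it was the task, the context, or the examples that moved it. It also makes the prompt easy to post in #ai-playground for someone else to reuse.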

Why curiosity scales

A single AI policy might keep you compliant, but it won’t make anyone curious.

Curiosity spreads one shortcut at a time. Somebody finds a trick that saves them ten minutes, someone else copies it, and before long the behavior becomes culture.

Build the place where it’s okay to screw up.

Curiosity’s messy. So is learning. If you get that right, the policy, polish, and predictability will follow.

Curiosity still scales faster than policy.

What’s the takeaway? You don’t teach someone to swim by handing them a manual. You toss them into the shallow end, cheer when they splash, and celebrate when they try again. Learning how to AI in the workplace is a lot like that. Build the place for people to play and they’ll keep playing.